Conditional GAN


Model

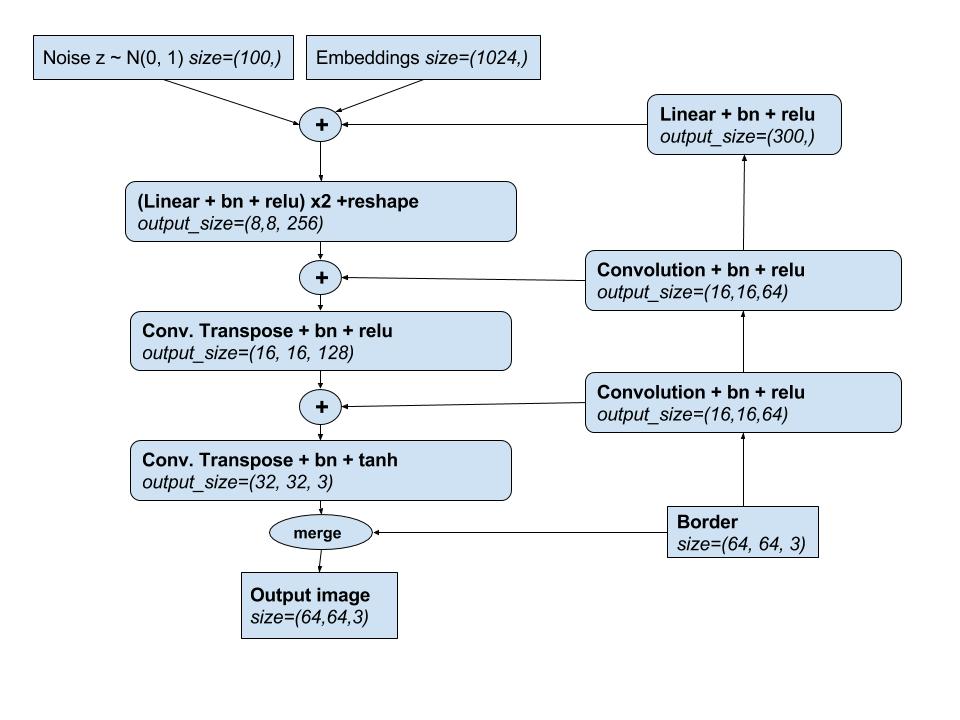

Here is a schematic of the generator architecture:

The different models are defined in model.py.

I chose to work with a DCGAN architecture to try to learn the image manifold. Using a GAN as the generative model has the advantage of producing sharp images, unlike a variational auto-encoder, for example.

I also wanted to learn the TensorFlow library. It is actively maintained by Google and is on its way to becoming a good business solution for production deep learning applications.

I started with a working implementation of a DCGAN that I found on GitHub.

To test it on our task, I first trained it to generate full 64x64 images without conditioning. The results were poor: MSCOCO images have great variance, and the unconditioned model did not produce promising samples.

Conditioning

Border

The first condition is the border. The network must learn to use the border information as:

- a scene description: the border must give hints about the content and the structure of the image.

- a continuity constraint on the reconstructed 64x64 images.

I apply convolutions to the border, with skip connections to the deconvolution layers of the same spatial size, where I concatenate the channels.

The output of the convolution layers is flattened and concatenated with the initial noise and the caption embeddings.
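The two concatenations described above can be sketched in plain Python, using nested lists in place of tensors. This is only an illustration of the shapes involved, not the code from model.py: the function names and all sizes except the 1024-dimensional caption embedding (the 4x4 feature maps, channel counts, and 100-dimensional noise) are assumptions.

```python
import random

def concat_channels(a, b):
    """Concatenate two (H, W, C) feature maps along the channel axis."""
    return [[pa + pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]

def flatten(feature_map):
    """Flatten a nested (H, W, C) list into a flat list of values."""
    return [v for row in feature_map for pixel in row for v in pixel]

def generator_input(border_features, noise, caption_embedding):
    """Flattened border features, then noise, then the caption embedding."""
    return flatten(border_features) + list(noise) + list(caption_embedding)

rng = random.Random(0)

def random_map(h, w, c):
    return [[[rng.random() for _ in range(c)] for _ in range(w)]
            for _ in range(h)]

# Hypothetical sizes, chosen only for the example.
conv_out = random_map(4, 4, 8)      # last convolution over the border
deconv_out = random_map(4, 4, 16)   # deconvolution layer of the same size
noise = [rng.uniform(-1.0, 1.0) for _ in range(100)]
caption_embedding = [rng.gauss(0.0, 1.0) for _ in range(1024)]

# Skip connection: channels are stacked, spatial size is unchanged.
skip = concat_channels(deconv_out, conv_out)

# Generator input vector: 4*4*8 + 100 + 1024 values.
z = generator_input(conv_out, noise, caption_embedding)
print(len(skip[0][0]), len(z))
```

The skip connection only works when both feature maps have the same height and width, which is why each convolution is paired with the deconvolution layer of the same size.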

The discriminator is not conditioned on the border separately; instead, the border and the center are merged into a single image before entering the discriminator.
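A minimal sketch of this merge step, again with nested lists standing in for tensors. The `merge` helper is hypothetical, and the 32x32 center size is an assumption made for the example; only the 64x64 image size comes from the text.

```python
def merge(border, center):
    """Paste `center` into the middle of `border` and return a new image.

    Both images are nested lists indexed as image[row][col].
    """
    size, inner = len(border), len(center)
    offset = (size - inner) // 2
    merged = [list(row) for row in border]  # copy, leave `border` untouched
    for r in range(inner):
        merged[offset + r][offset:offset + inner] = center[r]
    return merged

# Assumed sizes: a 64x64 border image with a 32x32 generated center.
border = [[0] * 64 for _ in range(64)]
center = [[1] * 32 for _ in range(32)]
full = merge(border, center)
print(len(full), len(full[0]), sum(map(sum, full)))
```

Because the discriminator only ever sees the merged image, a center that does not match its border at the seam is something it can learn to reject.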

Captions

The captions are first preprocessed into embeddings in a 1024-dimensional vector space. I used a model I found on GitHub that was trained on the same dataset, MSCOCO. In this model, images and sentences are mapped into a common vector space, where the sentence representation is computed using an LSTM.

In the generator, the caption embeddings are concatenated with the noise vector; in the discriminator, they are concatenated just before the MLP layers.

Training

I trained the model with the Adam optimizer, with a learning rate of 0.0002 and the beta1 parameter set to 0.5.
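To make the role of these two hyperparameters concrete, here is a sketch of one textbook Adam update on a single scalar parameter. It is not TensorFlow's implementation; `beta2=0.999` and `eps=1e-8` are assumed values that the text does not state.

```python
import math

def adam_step(param, grad, m, v, t,
              lr=0.0002, beta1=0.5, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter at step t (t starts at 1)."""
    m = beta1 * m + (1.0 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)                # bias correction
    v_hat = v / (1.0 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v

w, m, v = 1.0, 0.0, 0.0
w, m, v = adam_step(w, grad=0.3, m=m, v=v, t=1)
print(w)  # close to 1.0 - 0.0002: the first step has size lr
```

A beta1 of 0.5 gives the gradient history a shorter memory than the common choice of 0.9, so the update direction reacts faster as the generator and discriminator change each other's objectives.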

Discriminator loss

Here is the loss of the discriminator:

Ld = 0.5*Ld_real + 0.25*(Ld_fake_captions + Ld_generator_imgs)

The three terms are:

- Ld_real is the loss of predicting the accept class for an image taken from the training set, paired with a random linear combination of its associated captions. I added some label smoothing (the target is 0.9 +/- 0.1 rather than 1).

- Ld_fake_captions is the loss of predicting the refuse class for an image taken from the training set, paired with a random caption embedding.

- Ld_generator_imgs is the loss of predicting the refuse class for an image produced by the generator.
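The weighted sum above can be sketched on scalar discriminator outputs as follows. Several details are assumptions made for the example: binary cross-entropy as the per-term loss, a smoothed target drawn uniformly from [0.8, 1.0] as one reading of "0.9 +/- 0.1", and normalized positive weights for the random combination of captions. The helper names are hypothetical.

```python
import math
import random

def bce(p, target):
    """Binary cross-entropy between a probability p and a target in [0, 1]."""
    p = min(max(p, 1e-7), 1.0 - 1e-7)  # avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def mix_captions(embeddings, rng):
    """Random convex combination of the caption embeddings of one image."""
    weights = [rng.random() for _ in embeddings]
    total = sum(weights)
    dim = len(embeddings[0])
    return [sum(w / total * e[i] for w, e in zip(weights, embeddings))
            for i in range(dim)]

def discriminator_loss(p_real, p_fake_caption, p_generated, rng):
    """Ld = 0.5*Ld_real + 0.25*(Ld_fake_captions + Ld_generator_imgs)."""
    real_target = rng.uniform(0.8, 1.0)          # smoothed "accept" label
    ld_real = bce(p_real, real_target)
    ld_fake_captions = bce(p_fake_caption, 0.0)  # real image, wrong caption
    ld_generator_imgs = bce(p_generated, 0.0)    # generated image
    return 0.5 * ld_real + 0.25 * (ld_fake_captions + ld_generator_imgs)

rng = random.Random(0)
mixed = mix_captions([[0.0, 2.0], [2.0, 0.0]], rng)
good = discriminator_loss(0.9, 0.1, 0.1, random.Random(1))
bad = discriminator_loss(0.1, 0.9, 0.9, random.Random(1))
print(mixed, good, bad)
```

The weights 0.5 and 0.25 + 0.25 give the accept and refuse classes equal total weight, even though there are two kinds of refused pairs.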

Generator loss

The generator is trained to fool the discriminator, that is, to make it predict the accept class for the generated images.
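One common way to express this objective is the loss -log D(G(z)), which is small when the discriminator accepts a generated image. The sketch below assumes that form; the text does not say exactly which variant the implementation uses.

```python
import math

def generator_loss(p_generated):
    """-log D(G(z)) for the discriminator's accept probability on a fake."""
    p = min(max(p_generated, 1e-7), 1.0 - 1e-7)  # avoid log(0)
    return -math.log(p)

fooled = generator_loss(0.9)   # discriminator mostly accepts the fake
caught = generator_loss(0.1)   # discriminator mostly refuses the fake
print(fooled, caught)
```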

GPU

I used Amazon instances to train my models, specifically p2.xlarge instances, which are equipped with an NVIDIA K80 GPU. I trained this model for 10 epochs, which took 2 days.

Training set

The model is trained on a training set of 110000 examples, obtained by merging the training set with 99% of the validation set. I did this because I do not have a quantitative performance metric, so I evaluate the model by visual inspection, and a held-out set of only 4000 examples is enough for that.

Each example is made of:

- An image of 64x64 pixels with three colour channels

- A list of caption embeddings of variable shape (n, 1024), where n is the number of captions associated with the image (typically 5).

I built an asynchronous input pipeline using TensorFlow's tf.Example binary format, so that all the data import code lives in the computation graph.

You can find the dataset

This gives two major advantages:

- The dataset is a set of 60 tfrecords files, each file containing 4096 examples. The dataset is space efficient and fairly simple to manipulate.

- The extraction of the examples can be defined directly in the computation graph, with a queue system. The examples are fed into the queues by threads.
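The queue idea can be illustrated with Python's standard `queue` and `threading` modules. This is an analogy for how reader threads keep a queue filled while the training loop consumes batches; it is not the TensorFlow queue API, and the shard contents, sizes, and function names are made up for the example.

```python
import queue
import threading

def reader(shard, out_queue):
    """Push every example of one shard into the shared queue."""
    for example in shard:
        out_queue.put(example)

def run_pipeline(shards, batch_size, capacity=32):
    """Start one reader thread per shard and collect examples into batches."""
    q = queue.Queue(maxsize=capacity)
    threads = [threading.Thread(target=reader, args=(shard, q), daemon=True)
               for shard in shards]
    for t in threads:
        t.start()

    total = sum(len(shard) for shard in shards)
    batches, batch = [], []
    for _ in range(total):
        batch.append(q.get())  # blocks until a reader has produced something
        if len(batch) == batch_size:
            batches.append(batch)
            batch = []
    if batch:
        batches.append(batch)  # last, possibly smaller, batch

    for t in threads:
        t.join()
    return batches

# Three tiny stand-in shards of 8 examples each.
shards = [[("shard%d" % s, i) for i in range(8)] for s in range(3)]
batches = run_pipeline(shards, batch_size=4)
print(len(batches))
```

Because several threads write to the same queue, examples from different shards are interleaved in an order that varies from run to run, while the consumer never waits on file reading as long as the queue stays non-empty.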